AWS Hybrid DNS with Terraform

Last updated: July 05, 2022

Overview

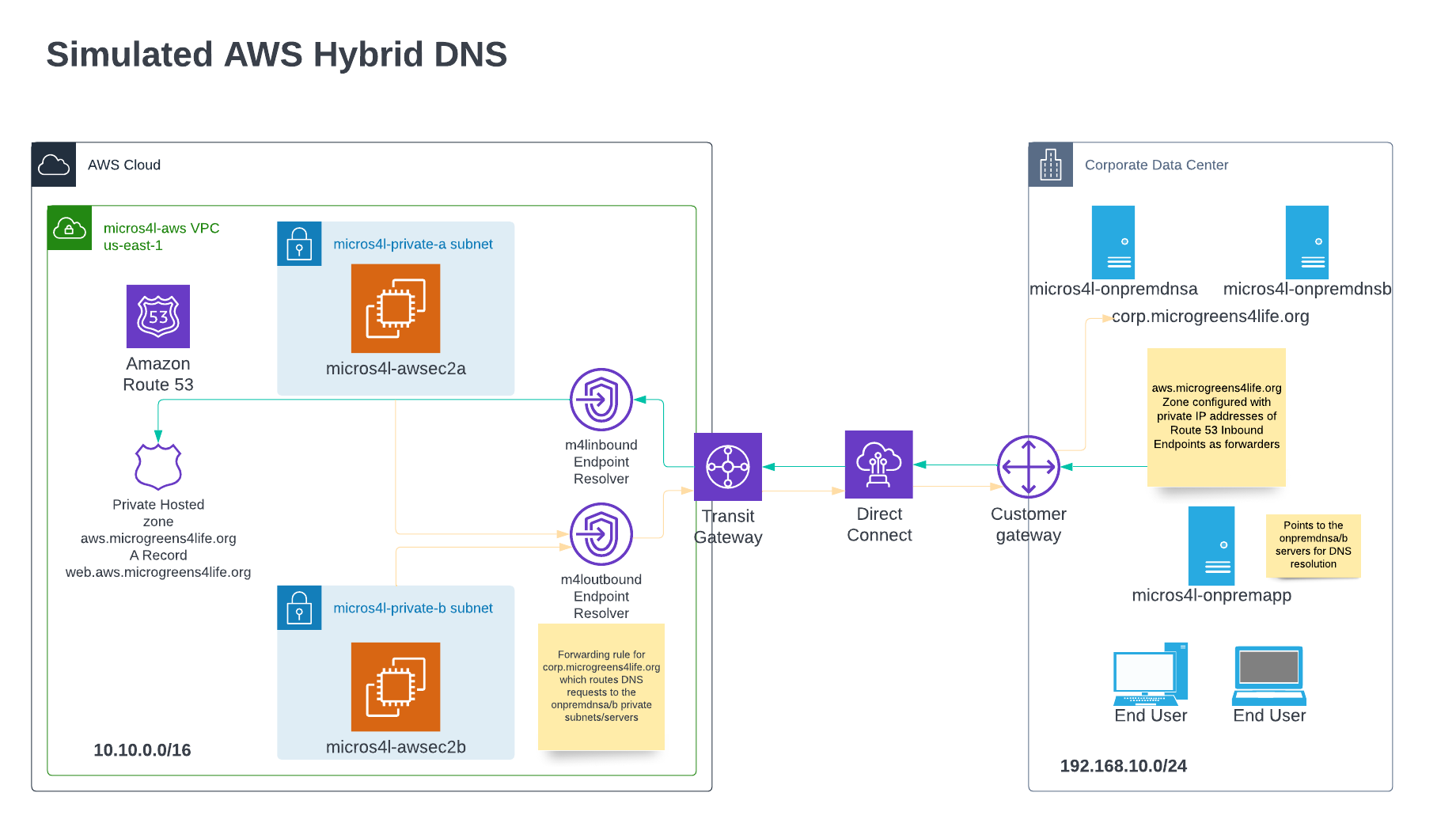

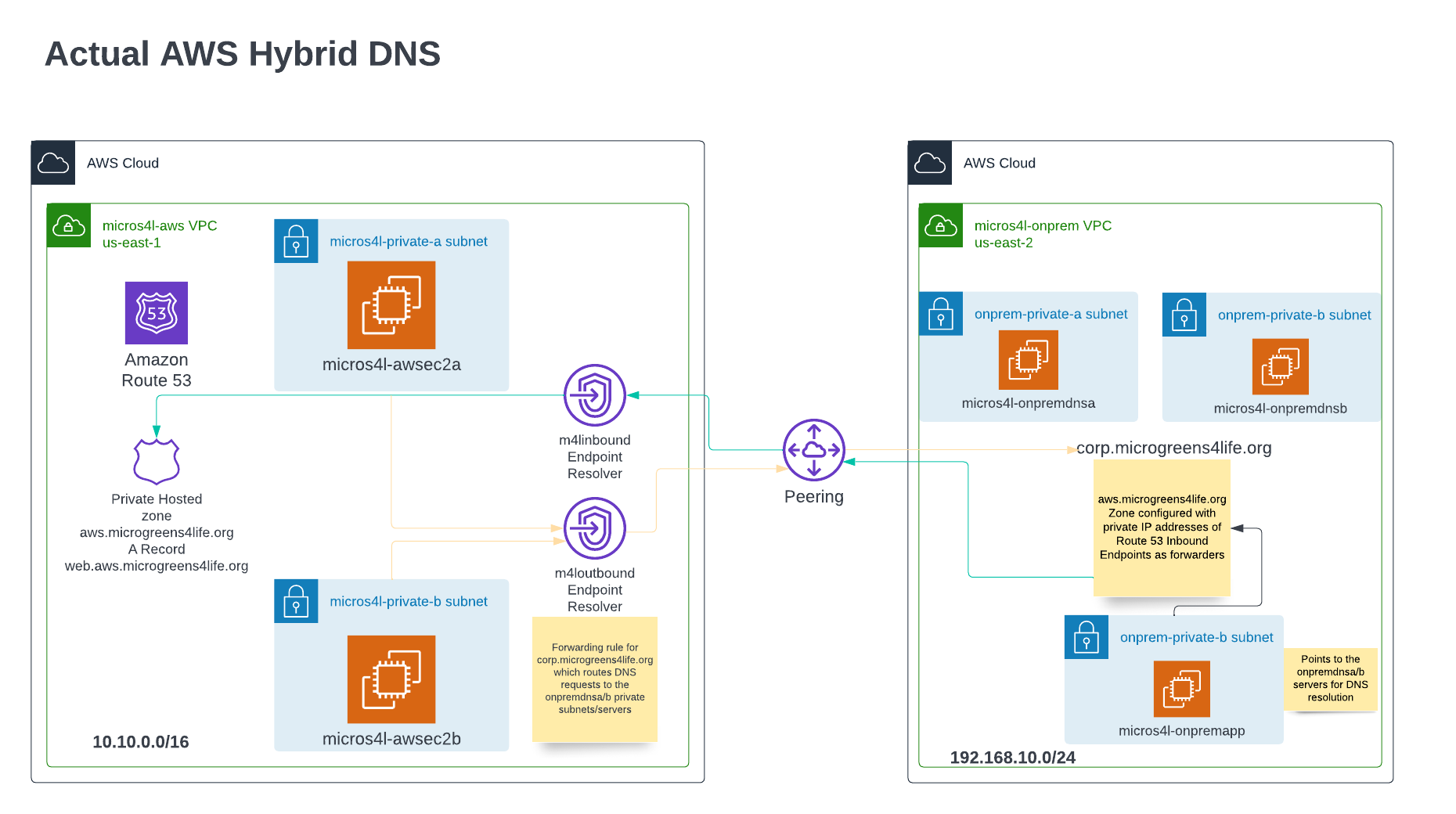

The purpose of this runbook is to demonstrate the implementation of an AWS Hybrid DNS design and architecture between an AWS region hosting private only subnets and an on-prem private corporate data center. The intent of this design is to simulate a hybrid DNS cloud connectivity setup to an on-prem environment using AWS DirectConnect (DX) however, the actual implementation will provide private DNS resolution over an established inter-region AWS VPC Peering connection through various Route 53 services and Linux Bind DNS server components as detailed below.

Pre-requisites

Design Artifacts

- YouTube demo recording

- Diagram: Simulated AWS Hybrid DNS Network Design Architecture

- Diagram: Actual AWS Hybrid DNS Network Design Architecture

- Clone/Fork the repo containing the Terraform artifacts for the AWS Hybrid DNS design here: jksprattler/aws-networking

- relevant files are under

/aws-terraform-hybrid-dns

- relevant files are under

- Set up integrated DNS resolution for hybrid networks in Amazon Route 53 - AWS Prescriptive Guidance

Diagrams

Architecture

The us-east-2 region will be hosting the micros4l-onprem VPC with a prefix of 192.168.10.0/24 also containing 2 private subnets. Two t2.micro EC2 instances will be deployed here in separate subnet/availability zones called micros4l-onpremdnsa/b and configured with Bind and the zone corp.microgreens4life.org. Additionally, the zone for aws.microgreens4life.org will be configured on these servers with forwarders set to the AWS Inbound endpoint IP addresses in order to forward requests for the Web subdomain to the AWS Route 53 resolvers. A third basic t2.micro EC2 instance called micros4l-onpremapp will be deployed which we'll use for testing DNS resolution from "on-prem" to AWS (us-east-1).

The us-east-1 region will be hosting the micros4l-aws VPC with a prefix of 10.10.0.0/16 containing 2 private subnets - note the non-overlapping private IP space between the environments as this is a requirement for VPC peering in addition to DirectConnect (DX) connectivity which is being simulated. Two basic t2.micro EC2 instances, micros4l-awsec2b/b will be deployed here for testing DNS resolution into our simulated Corporate on-prem datacenter. Each instance is deployed in a separate subnet/availability zone. The Route 53 private hosted zone for aws.microgreens4life.org with an A record of web.aws.microgreens4life.org is configured here. Additionally, Route 53 Inbound and Outbound endpoints are hosted here where each endpoint gets associated with both of the us-east-1 private subnets. The Outbound endpoint will have a forwarding rule for the corp.microgreens4life.org zone associated with it which targets the micros4l-onpremdnsa/b Bind servers hosted "on-prem" (us-east-2) to resolve outbound requests to the Corporate subdomains.

All instances will be deployed with settings configured to allow Systems Manager connectivity as this is the only way to connect to these private instances in this environment as none of them will be deployed with a public IP address nor is there any internet gateway created - these are completely isolated, private environments.

Procedure

- Navigate to the

/global/iamdirectory and run terraform plan/apply:cd aws-terraform-hybrid-dns/global/iam terraform plan terraform applyResources deployed in this terraform module:

roles.tf- IAM instance policy, roles and policy attachments which all EC2 instances in this design will utilizes3.tf- S3 bucket for storing the terraform state files. Update your bucket name here as it must be globally unique

- Navigate to the

/us-east-2directory and run terraform plan/apply:cd aws-terraform-hybrid-dns/us-east-2 terraform plan terraform applyResources deployed in this terraform module:

ec2.tf- micros4l-onpremdnsa/b simulating on-prem Linux Bind/DNS servers and micros4l-onpremapp Linux servervpc.tf- VPC with prefix 192.168.10.0/24, 2x private subnets, private route table associated with the 2x subnets, Security Group and rules allowing SSM access and DNS requests, VPC Endpoints for SSM connectivity

- Capture the outputs from the

/us-east-2module deployment and save them in a temp text file for use as input in the next step. For example:onprem-private-rt_id = "rtb-0fae5266503453da8" onpremdnsa_ip = "192.168.10.11" onpremdnsb_ip = "192.168.10.236" onpremvpc_id = "vpc-0ab74c12320891aa3" - Navigate to the

/us-east-1directory and run terraform plan/apply:cd ../us-east-1 terraform plan terraform applyResources deployed in this terraform module:

ec2.tf- micros4l-awsec2a/b AWS instancesroute53.tf- aws.microgreens4life.org Route 53 hosted private zone, web.aws.microgreens4life.org A record, Route 53 Inbound endpoint, Route 53 Outbound endpoint for the corp.microgreens4life.org domain with a Forwarding rule pointing to the Corp on-prem environment (us-east-2)vpc.tf- VPC with prefix 10.10.0.0/16, 2x private subnets, private route table associated with the 2x subnets, VPC Peering connectivity between the AWS us-east-1 region to the "on-prem" us-east-2 region, Security Group and rules allowing SSM access and DNS requests, VPC Endpoints for SSM connectivity

- Capture the outputs from the

/us-east-1module deployment and save them in a temp text file for use as input in the next step. Note you'll only need the "ip" address output from each of the 2 endpoints. For example:aws_route53_resolver_inbound_endpoint_ips = toset([ { "ip" = "10.10.0.90" <---- INBOUND_ENDPOINT_IP1 "ip_id" = "rni-2bc122c23384d09af" "subnet_id" = "subnet-0e6a97614d0833b47" }, { "ip" = "10.10.10.221" <---- INBOUND_ENDPOINT_IP2 "ip_id" = "rni-75c2ecfc30094b3a9" "subnet_id" = "subnet-0dce015d7ba12e0de" }, ]) - In the awszone.forward file, replace the

INBOUND_ENDPOINT_IP1andINBOUND_ENDPOINT_IP2values of the forwarders with the Endpoint IP addresses from the outputs of the previous step. - From the AWS console, navigate to the EC2 instances in the us-east-2 region, select

micros4l-onpremdnsaand initiate a connection to it via Session Manager. Entersudo -iand with your editor of choice, vi or nano into the/etc/named.conffile. Scroll to the end of the file and paste the contents of your updated awszone.forward file, save and exit. Run the following command to restart bind service:systemctl restart named systemctl status namedUsing dig or nslookup, test that your local DNS server is resolving the AWS Route 53 private zone/domain for

aws.microgreens4life.orgnow that you've applied the Route 53 endpoint IP addresses into the Bind server's named.conf file. You should see it resolve to the 10.10.x.x private IP space of the us-east-1 AWS VPC where the Route 53 inbound endpoints are hosted. For example:sh-4.2$ dig web.aws.microgreens4life.org @127.0.0.1 +short 10.10.0.172 10.10.10.31Repeat above steps for

micros4l-onpremdnsbFrom the AWS console, navigate to the Route 53 service in the us-east-1 region and validate the A record hosted in your private Route 53 zone is using the same IP addresses that your dns server in us-east-2 just resolved to. - Navigate to the EC2 instances in us-east-2 and select

micros4l-onpremappand initiate a connection to it via Session Manager. Entersudo -iand with your editor of choice, vi or nano into the/etc/sysconfig/network-scripts/ifcfg-eth0file. Scroll to the end of the file and paste the following contents replacing theTHE_PRIVATE_IP_OF_ONPREM_DNS_A/Bvalues with the actual private IP addresses of the onprem DNS servers which were given in the outputs of your terraform apply for the us-east-2 implementation in step 3.):DNS1=THE_PRIVATE_IP_OF_ONPREM_DNS_A DNS2=THE_PRIVATE_IP_OF_ONPREM_DNS_BRestart the network services:

systemctl restart networkRun a test ping/dig from themicros4l-onpremappinstance to the AWS route 53 hosted subdomain:ping web.aws.microgreens4life.org dig web.aws.microgreens4life.org +short - Navigate back to the ec2 instances in us-east-1 and initiate a systems manager session on micros4l-awsec2a/b and test dns resolution of the onprem hosted subdomain:

ping app.corp.microgreens4life.org dig app.corp.microgreens4life.org +short - Cleanup! Run a terraform destroy in each region/module starting with

us-east-1- Note I configured ignore lifecycle rules on the accepter_route_table_id and accepter_vpc_id prompts so just hit Enter here to bypass these. You'll need to input the onpremdnsa/b_ip private IP's as I couldn't get a lifecycle rule to work here:terraform destroy var.accepter_route_table_id Route table id of the accepter that you want to peer with it Enter a value: <Enter> var.accepter_vpc_id VPC id that you want to peer with it Enter a value: <Enter> var.onpremdnsa_priv_ip Private IP Address of micros4l-onpremdnsa Enter a value: 192.168.10.53 <-----onpremdnsa_ip var.onpremdnsb_priv_ip Private IP Address of micros4l-onpremdnsb Enter a value: 192.168.10.243 <-----onpremdnsb_ipDo the same for

us-east-2- No prompts for input on this one, simply justterraform destroyit. I've left the/global/iamresources in tact since it's just an IAM role/policy and S3 bucket storing my terraform state files.

Summary

Upon completion of the above procedure, you should now have 2 separate private environments with fully integrated DNS resolution between them. The private AWS VPC instances in us-east-1 are successfully resolving the Corporate subdomain hosted in the private "on-prem" VPC in us-east-2 via the outbound endpoint and forwarding rule rule for the Corporate domain which gets routed via the VPC Peering connection. Conversely, the private "on-prem" instances in us-east-2 are successfully resolving the web subdomain hosted in the private AWS VPC us-east-1 via the inbound endpoint resolver.

Note the original idea for this design came from Cloud Trainer, Adrian Cantrill. You can find the CFT stack, procedure steps, and videos for his lab here

Differences between deployments where I:

- Coded all infrastructure steps in Terraform including vpc peering, vpc peering inter region routes, route 53 inbound and outbound endpoints and forward rules, etc. instead of CloudFormation (

HybridDNS.yaml) used for initial/base infrastructure. - Coded outputs.tf files to provide values for the input variable strings for the deployments to the separate regions/modules to prevent needing to hunt down id's and ip addresses from within the AWS console (ie vpc peering id, route table id for peering connection, onpremdnsa/b private ip addresses for the aws zone file)

- Deployed the simulation of the on-prem / DX connected environment in a completely separate region (us-east-2) instead of all in the same us-east-1 region in an attempt to increase complexity, validate inter-region vpc peering works with DNS resolution against private Route 53 endpoints and just for overall better visualization of connectivity between 2 isolated environments/regions

- Chose microgreens4life for my Domain/zone instead of animals4life - nothing against animals, I just really love microgreens. I also replaced all resource names in my code with my variation of m4l/micros4l/microgreens4life which allowed me the opportunity to deeply review the code line by line so I wasn't just copy/pasting pieces of Adrian's CFT stack code (ie a4l/animals4l/animals4life).